

October 8, 2025 | Sandy Dornsife


In recent years, states have homed in on laws that limit minors' use of social media. Such laws tend to fall into a few distinct categories: (1) banning minors from opening social media accounts and/or requiring parental consent to open such accounts; (2) prohibiting social media platforms from using certain algorithms; (3) imposing specific liability on social media platforms for harm caused to minors; (4) requiring warning labels to be displayed on platforms; and (5) establishing commissions or committees to study and make policy recommendations regarding social media and youth mental health. The 2025 legislative session saw a significant number of bills introduced in all of these categories.

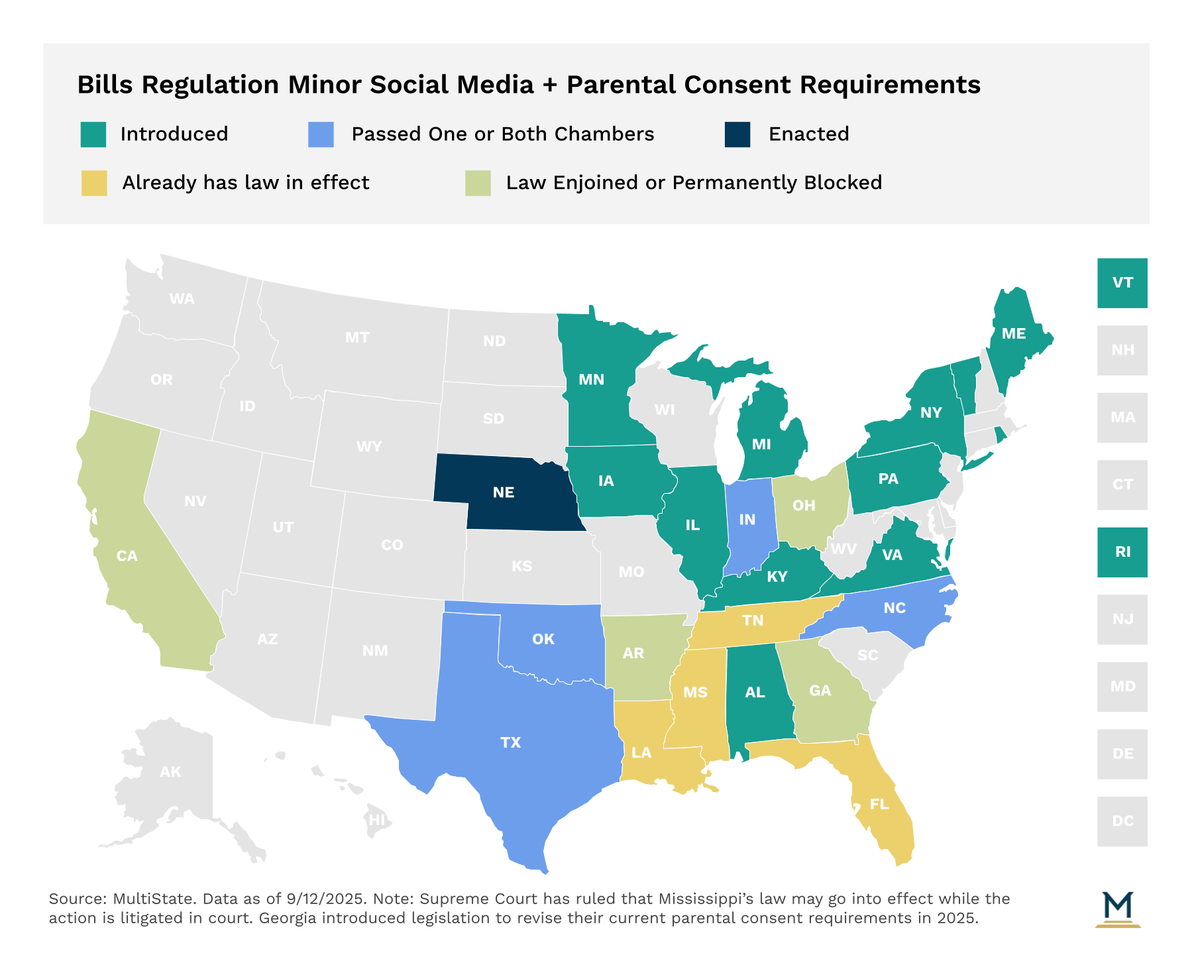

Currently, eight states (Arkansas, California, Florida, Georgia, Louisiana, Mississippi, Ohio, and Tennessee) have enacted legislation that bans minors from obtaining social media accounts outright and/or requires minors of certain ages to obtain parental consent in order to open accounts. During the 2025 state legislative session, eighteen states attempted to enact their own versions of this legislation through almost thirty bills. Nine states proposed an absolute ban on social media accounts for certain minors; however, the age at which the ban applied varied by state. Some states, like Kentucky, Maine, and North Carolina, would have prohibited only minors under the age of 14 from opening accounts, while others proposed higher age thresholds, such as Texas, whose bill would have restricted anyone under the age of 18. Additionally, legislation in fifteen states would have required parental consent for minors to obtain social media accounts as an alternative to a ban for minors of certain ages, ranging from sixteen and under in Michigan and Minnesota to eighteen and under in Indiana. Only one of these bills was actually enacted, and it is one of the strictest in the nation: a Nebraska bill requiring parental consent for minors under 18 to open social media accounts. Virginia, however, was able to enact a bill that stopped just short of a social media ban by prohibiting minors from accessing social media for more than one hour per day unless given parental consent.

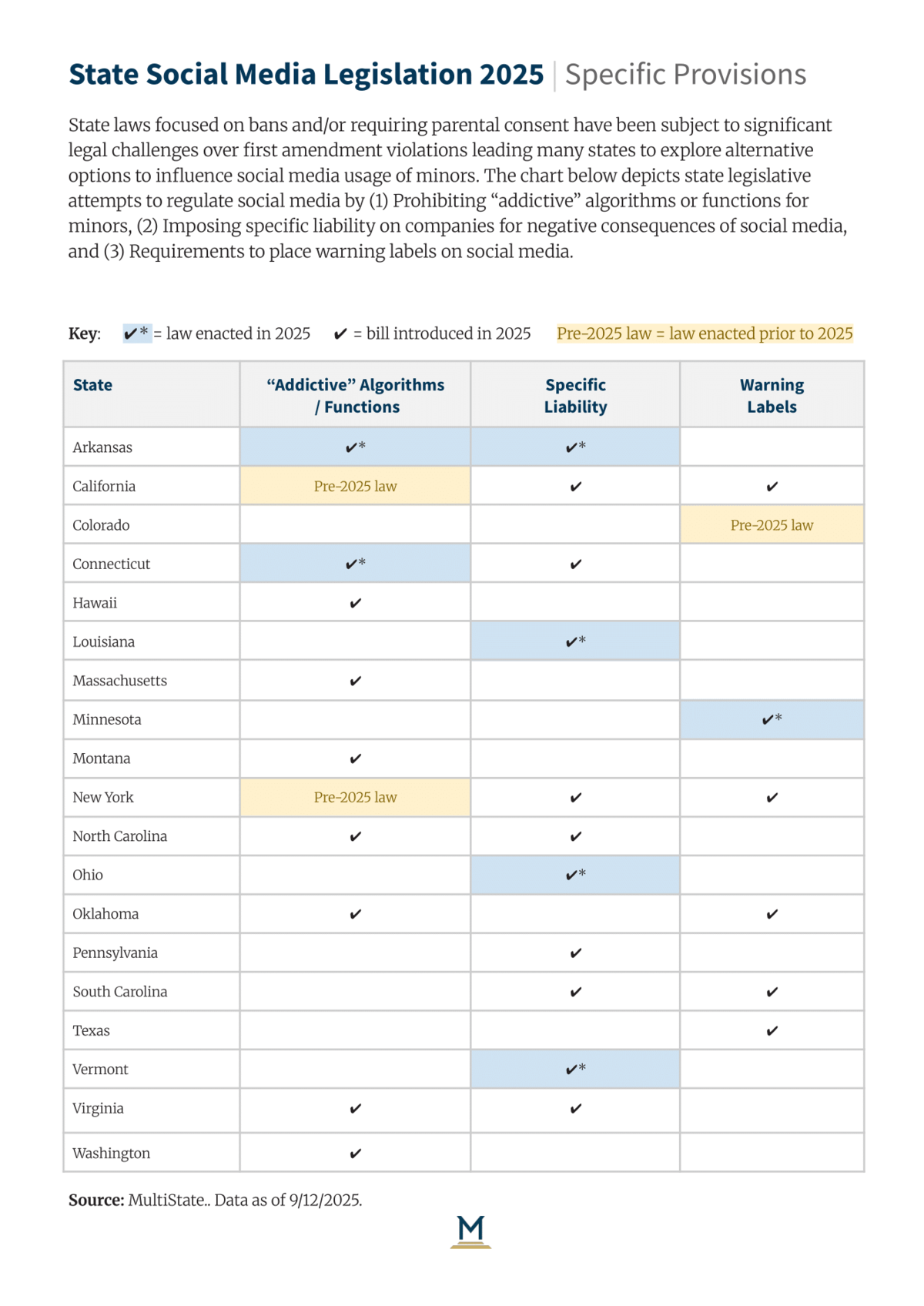

In 2024, California and New York became the first states in the nation to attempt to combat the negative mental health consequences of social media by prohibiting the use of “addictive algorithms,” or features designed to increase use, on minors' accounts. California and New York both prohibit the operator of an addictive internet-based service or application from providing an addictive feed to minors without parental consent, and California's law provides that even parental consent does not shield a provider from liability for harm its service causes to a minor. While California’s law was challenged in court, a federal judge recently permitted the law to go into effect while the case is being tried. This year, Arkansas, Connecticut, Hawaii, Maine, Montana, North Carolina, Virginia, and Washington all tried to follow New York and California’s lead with their own addictive feed legislation. Connecticut successfully enacted its bill to prohibit social media platforms from using features designed to increase use by minors, and Arkansas replaced its previous social media law with provisions that included a ban on certain addictive functions for minors. Legislation in Washington and Montana also saw limited success, with bills in both states passing the first house of the legislature, but both ultimately failed to pass this year.

Currently, individuals seeking to hold social media platforms legally responsible for mental health consequences rely on product liability and negligence arguments, with limited success. This has led several states to pursue policies that impose specific standards of care on social media platforms, in the hopes of encouraging companies to take preventative actions. This year, eleven states attempted to enact such policies. Companion bills in New York (NY S 4561/NY A 3335) sought to establish liability for “interactive computer service providers” for knowingly or negligently promoting injurious content to minors. A bill in California would have held social media platforms responsible for damages caused by their failure to exercise ordinary care or skill, and Oklahoma legislation would have created a cause of action against a social media platform for adverse mental health consequences of excessive use. Of the twenty bills introduced in 2025, however, only bills in Arkansas and Vermont were enacted. Arkansas’s law was a direct attempt to circumvent the First Amendment claims that thwarted its previous social media platform legislation, while also adding a private right of action for parents to hold social media platforms liable for any harm caused. Vermont’s legislation imposes on a covered business whose products are likely to be accessed by minors a minimum duty of care to use personal data and design its online service in a way that does not result in emotional distress, compulsive use, or discrimination.

An alternative avenue that some states chose to pursue to “protect” children from social media harm is the imposition of required warning labels. Last year, Vivek Murthy, the U.S. Surgeon General under President Biden, advocated for warning labels to be placed on social media sites to advise consumers that use of the platforms could have a negative effect on mental health. In 2024, Colorado became the first state in the nation to pass warning label legislation, requiring timed pop-up warnings about the impacts of social media use on mental health. In 2025, seven states introduced bills seeking to require social media platforms to post such warning labels on their sites. Minnesota became the second state to enact a warning label law when it finalized MN SB 1807 during its special session this year as HF 2. As enacted, the law requires social media platforms to display a warning label that informs users of the potential negative mental health impacts of social media and provides access to mental health resources. Additionally, New York currently has a bill awaiting Governor Hochul’s signature that would require platforms with addictive feeds, autoplay, infinite scroll, like counts, and/or push notifications to provide a warning label each time the user accesses the platform. Likewise, at the end of September the California legislature sent the governor a bill that would require platforms to display periodic warnings to certain users about the possible negative mental health consequences for minors.

Bills that do make it to enactment, however, are likely to be challenged in court, and current cases give opponents good cause to be optimistic. Courts in Arkansas and Ohio have both ruled that restricting minors' access to social media platforms violates the First Amendment as a content-based restriction on speech and have permanently blocked those states’ laws. Laws in California, Florida, and Georgia have also been temporarily blocked by the courts as potential First Amendment violations, and Louisiana has agreed not to enforce its own law while it is being litigated. Utah attempted to circumvent the legal challenges by repealing its social media law and enacting two pieces of legislation that include remarkably similar provisions in 2024 (HB 464, SB 194), and, as previously mentioned, in 2025, Arkansas did the same (SB 611, SB 612).

These First Amendment claims all assert that requiring users to prove that they satisfy an age requirement in order to access legally protected speech is an unconstitutional burden. While the U.S. Supreme Court did recently rule in favor of a Texas law requiring pornographic websites to use age verification, the narrow holding was premised on the interest of the state in protecting children from “obscene” material. While some research points towards social media use as a cause of decreased mental health in minors, such research is relatively new and lacks a complete consensus. It would be difficult for a state to argue that its interest in protecting children from social media matches its interest in protecting them from obscene material. The only minor success proponents of these bills have experienced is in the case of NetChoice v. Fitch, in which the U.S. Supreme Court declined to block Mississippi’s law requiring minors to obtain parental consent before opening social media accounts from going into effect while the case works its way through the courts, despite a lower court’s finding that the law likely runs afoul of the First Amendment. Notably, however, a concurring opinion by Justice Kavanaugh asserted that, in his view, the plaintiffs were likely to succeed in their First Amendment claims in the end.

With the legal uncertainty of imposing duties on social media platforms and the lack of a formal consensus on how social media influences mental health, not to mention effective ways to mitigate this influence, some states have sought more moderate approaches. Georgia, Massachusetts, and New York all introduced legislation to create a Commission or Advisory Council to study the impact of social media on minors and make policy recommendations. Other states sought to prepare minors for the possible consequences of social media through awareness campaigns or courses. In Indiana, Governor Braun issued an Executive Order this year requiring the Department of Education to create recommendations on how to highlight the harmful effects of excessive cell phone and social media usage on the cognitive development of children and adolescents. Similarly, North Carolina enacted HB 959, requiring that all students receive a course of study on social media and its effects on health once during elementary school, once during middle school, and twice during high school.

In response to the youth mental health crisis, experts have scrambled to come up with unique solutions to combat the possible causes, with many states focusing on social media platforms. Limited precedent and a lack of research on effective policies have resulted in an extremely diverse range of legislation restricting or modifying minors' access to social media. Additionally, this wide variety of policies sits on very uncertain legal ground. States seem to be developing new policies as fast as opponents can challenge them.

