Technology & Privacy

All of the Comprehensive Privacy Laws That Take Effect in 2026

February 4, 2026 | Max Rieper

February 12, 2026 | Max Rieper

At the end of each year, our policy analysts share insights on the issues that have been at the forefront of state legislatures throughout the year. Here are the big developments and high-level trends we saw in 2025 in the deepfake policy space, plus what you can expect in 2026.

As artificial intelligence (AI) tools grow more sophisticated, they provide more opportunities for misuse. Generative AI models can now create realistic-looking imagery and audio of events that never happened and of people and places that don’t even exist. Lawmakers have struggled to enact broad regulation of AI, but have had much more success passing legislation narrowly targeted at a form of synthetic media called deepfakes. These bills include measures aimed at preventing the spread of sexual deepfake content as well as misleading political deepfake communications.

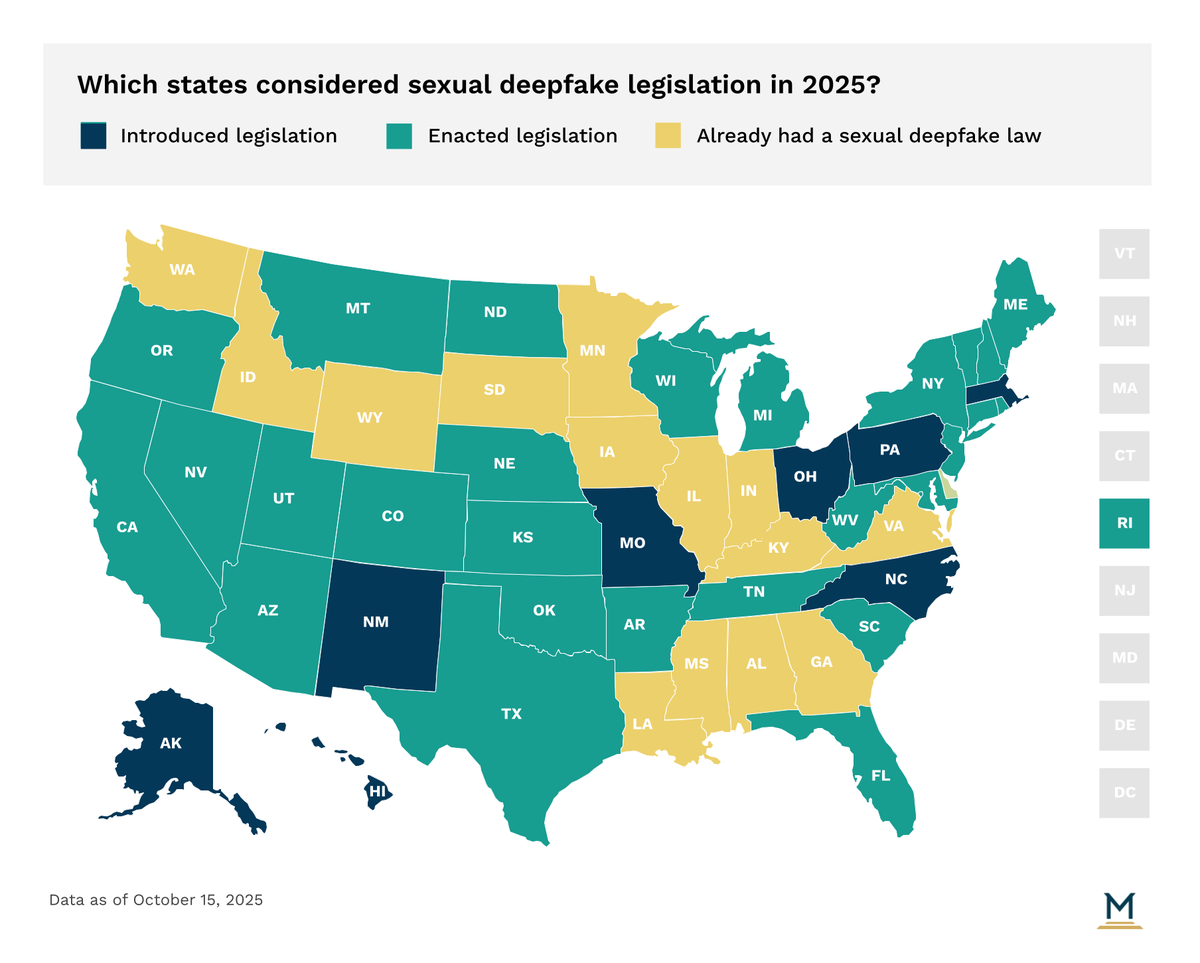

Many generative AI tools allow the manipulation of images and videos in creative ways. But those tools can also be used to place someone’s likeness in sexual content, causing harm and damaging reputations. Numerous high schools around the country have dealt with a proliferation of sexual deepfakes depicting their students. Lawmakers in every state have introduced some sort of bill addressing the distribution of sexual deepfake content. Some bills address non-consensual sexual deepfakes, others address child sexual abuse material (CSAM), and some address both.

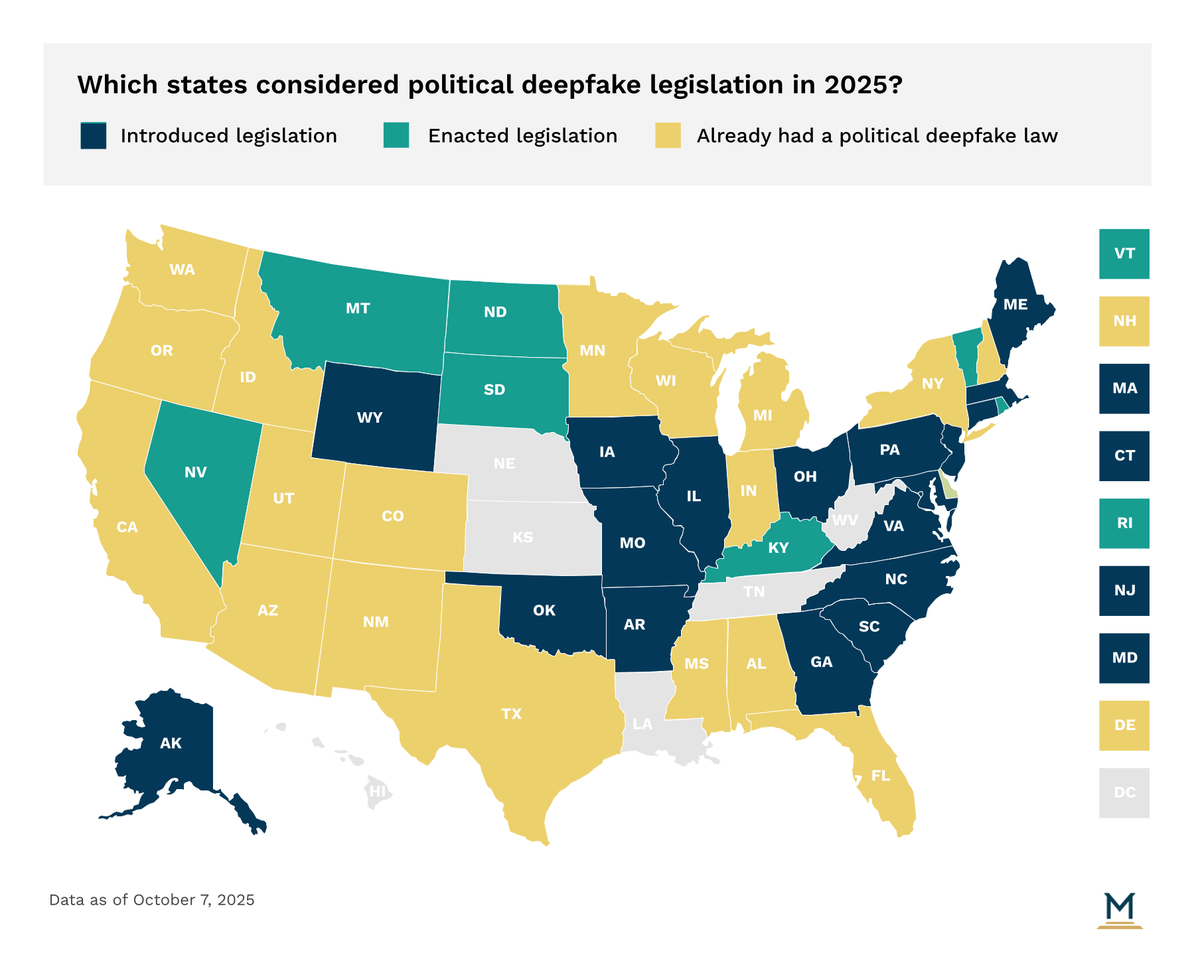

The manipulation of content can also be used in political communications. In 2024, a U.S. Senate candidate created an ad that featured his opponent holding a sign she never held. Lawmakers have sought to require that political ads containing digitally manipulated content carry disclaimers so that viewers are aware the content is not real.

However, these laws could run up against constitutional protections for free speech. A federal judge has already struck down one California law, holding that it was too broad and discriminated based on content when it could instead have been narrowly tailored to target “false speech that causes legally cognizable harms like false speech that actually causes voter interference, coercion, or intimidation.” California also had a second law struck down, one that blocked online platforms from hosting deceptive political deepfake content related to an election.

Looking ahead to 2026, lawmakers are expected to broaden their approach beyond punishing individual creators and distributors of deepfakes to include entities that enable production and dissemination, such as generative AI platforms, payment processors, hosting platforms, and cloud providers. The federal Take It Down Act, passed earlier in 2025, already requires online platforms to take down AI-modified or AI-generated non-consensual sexual content. States could also push bills to require watermarks, digital signatures, or cryptographic provenance tags on AI-generated audio and video, potentially coordinated through standards developed by the National Institute of Standards and Technology (NIST) or the Coalition for Content Provenance and Authenticity (C2PA).

Tech policy impacts nearly every company, and state policymakers are becoming increasingly active in this space. MultiState’s team understands the issues, knows the key players and organizations, and harnesses that expertise to help our clients effectively navigate and engage on their policy priorities. We offer customized strategic solutions to help you develop and execute a proactive multistate agenda focused on your company’s goals. Learn more about our Tech Policy Practice.
