Technology & Privacy

December 19, 2025 | Bill Kramer

Key Takeaways:

President Trump recently signed an executive order (EO) directing the federal government to file lawsuits against and cut funding to states that pass regulations on artificial intelligence (AI). The administration’s ire at state AI regulations has been ongoing through most of 2025, beginning with an attempt during the reconciliation process to preempt state AI laws. That effort was revived recently with talk of adding preemption to the National Defense Authorization Act (NDAA), but both legislative routes failed.

What’s important to remember about an executive order is that it’s not a law, which would be needed to preempt states on an issue. Instead, the EO is essentially a memo from the head of the executive branch, directing executive agencies to carry out current law in a specific way. This order throws the legal version of the kitchen sink at the states to punish them for their AI laws, but it’s unclear whether it will do anything to slow interest in regulating AI at the state level (it could very well have the opposite effect).

So what does the EO actually do? First, it threatens the states with lawsuits. It orders the Commerce Department to identify “onerous” state AI laws and creates a Department of Justice “AI Litigation Task Force” to challenge those laws in court. Second, the EO directs Commerce to withhold Broadband Equity, Access, and Deployment (BEAD) funding from states with “onerous” AI laws and asks other federal agencies to identify additional grant programs that can be withheld from states as well. Finally, the order directs the Federal Trade Commission (FTC) to determine whether states have violated FTC regulations by regulating AI.

What state laws are targeted? The only state law specifically referred to in the EO is Colorado’s SB 205, a broad algorithmic discrimination law that passed in 2024 but has yet to go into effect. An earlier version of the EO that leaked a few weeks ago also specifically pointed out California’s new AI safety law, which the major developers themselves largely supported or stayed neutral on. The draft order lambasted the California law as “premised on the purely speculative suspicion that AI might ‘pose catastrophic risk.’”

Notably, AI regulation at the state level has largely been a bipartisan effort. Florida Gov. DeSantis (R) recently unveiled an “AI Bill of Rights” legislative package to regulate artificial intelligence and data centers next session. Gov. DeSantis responded to President Trump’s executive order on social media, stating that “an executive order doesn’t/can’t preempt state legislative action.”

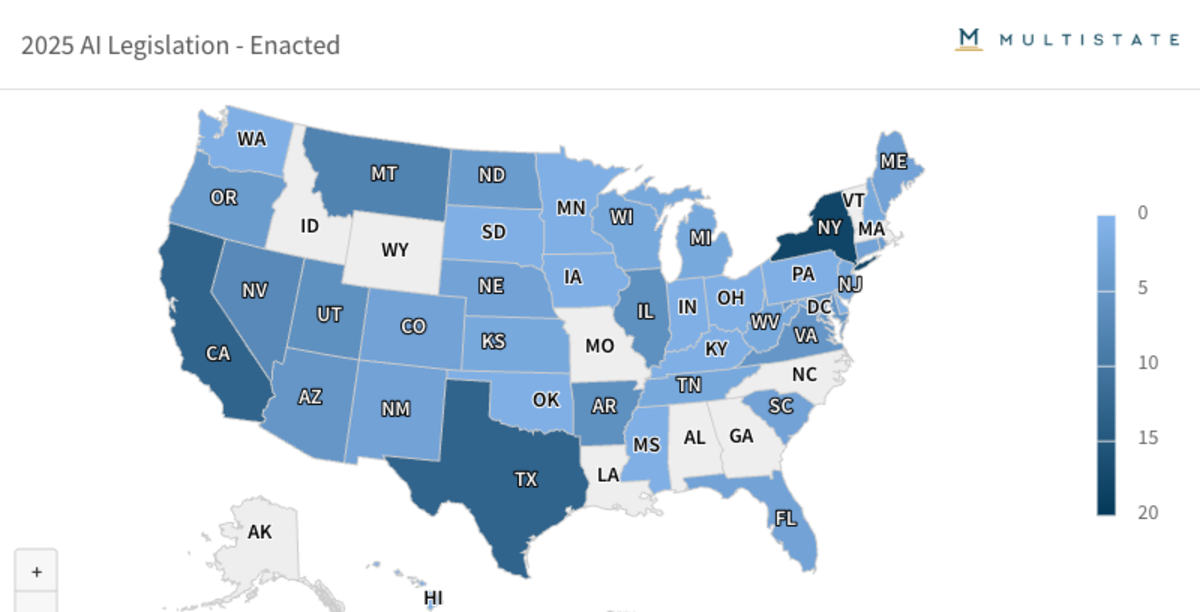

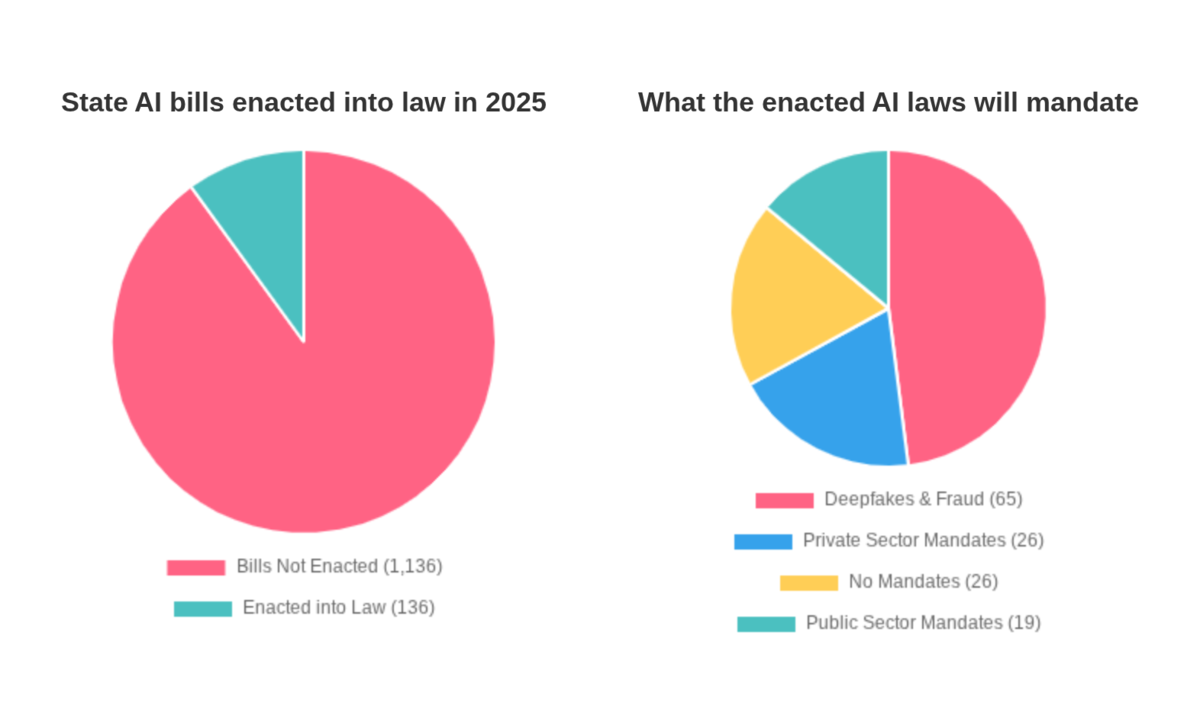

MultiState is tracking over 1,000 bills introduced by state lawmakers in 2025 related to AI. That large number has been touted as a big reason why preemption is needed. But state government affairs professionals know that only a small percentage of introduced bills actually become law. In 2025, only 136 of those bills became law, and an analysis of those new laws by our team at MultiState.ai found that only a handful would actually impose a broad mandate on developers or deployers of AI. The vast majority are narrowly focused on deepfakes, fraud, and public sector use of AI, or impose no mandates at all.

That’s not to say that there aren’t major issues with many of the state-level proposals. But the policymaking process on the state level can discover and correct policy mistakes effectively. The Colorado law is a good example. During 2025’s special session, Colorado lawmakers delayed the law from going into effect until June 2026, and it’s widely believed that the law as currently written will not go into effect, and that its provisions will be pared back with an emphasis on transparency over impact assessments. Colorado’s experience is one reason that no other state enacted a similar law in 2025 despite momentum at the start of the year. The 2024 version of the California AI safety bill was vetoed by Gov. Newsom, but the 2025 bill’s sponsor worked with opponents of the previous bill and built a consensus to get the law past the finish line.

Would one national standard be preferable to states experimenting with different laws? Of course. But while the EO repeatedly refers to a “national policy framework for AI,” the reality is that no federal regulatory framework exists. Despite proposals from thought leaders outside of government, a regulatory framework has yet to be presented by congressional leadership, and there’s skepticism about whether Congress could come to an agreement on a meaningful framework to regulate AI at the federal level.

When the draft EO initially leaked in November 2025, we suspected that it was intended as leverage to force preemption into the NDAA. That didn’t work, and a congressional solution is not imminent. But considering that each of the three main tools this EO will use to put pressure on the states is on shaky legal ground, we don’t anticipate much immediate effect. Ultimately, there will be plenty of lawsuits challenging the EO, but any policy effects will be significantly delayed if they materialize at all. In a midterm election year, it’s even possible that we could see state lawmakers becoming more aggressive on AI regulations to distance themselves from an unpopular president.

This article appeared in our Morning MultiState newsletter on December 16, 2025.
